
PHP is an interesting language, and to many it is considered a language that is archaic and badly designed. In fact, I largely agree that PHP's design is not optimal, but there is no other language in the world that is both easy to learn and deployable on almost any shared hosting service so easily. This is changing, but for now, PHP is here to stay.

By design, PHP does not have explicit typing-- a variable can be any type, and can change to any type at any time. This is in stark contrast to other languages, such as Apple's Swift, Java, and many others. Depending on your background, you may consider PHP's lack of explicit typing to be dangerous.

Not only this, but PHP is not the most performant language by any means. You can see this for yourself in TechEmpower's famous framework benchmarks. These results clearly show that PHP is at or near the bottom of the pile, being beaten outright by languages such as Java and Go.

So, how do you make one of the most popular languages in the world for web applications usable again? Many say that PHP simply needs to be killed off entirely, but Facebook disagrees.

HHVM is a project designed to revitalize PHP. HHVM often beats PHP in performance benchmarks, and supports a new explicitly typed language-- Hack. Hack, sometimes referred to as "Hacklang" so that you can actually search for it on Google, is almost completely compatible with standard PHP. With the exception of a few quirks, any PHP file can also be a valid Hack file. From there, you can take advantage of new features such as explicit typing, collections, and generics. For example:

<?hh

class MyClass {

public function add(int $one, int $two): int {

return $one + $two;

}

}

As you can see, Hack is very similar to PHP. In fact, Hack is really just an extension of PHP, since you can simply begin any PHP code with the <?hh tag to make it a valid Hack file.

So, how do you get started with HHVM and Hack? Unfortunately, Mac OS X and Windows binaries are not provided officially, and though you can install HHVM yourself by compiling it on a Mac, it's certainly not the most convenient. A better way of trying out Hack is to simply use a Linux server. One of my go-to providers for cheap "testing" servers on demand is DigitalOcean, which provides SSD cloud servers starting at $0.007 an hour. Of course, this tutorial applies to any server provider or even a local VM, so you can follow the steps regardless of who your provider is.

Booting Up a Server

First, you'll need an Ubuntu server-- preferably 14.04, though any version from 12.04 up will work fine, as will many other flavors of Linux.

On DigitalOcean, you can get started by registering a new account or using your existing one. If you're a new user to DigitalOcean, you may even be able to find a coupon code for $5-$10 in credit (such as the coupon code ALLSSD10, which should be working as of July 2014).

Once you've registered on DigitalOcean, you can launch a new "Droplet" (DigitalOcean's term for a virtual machine or VPS) with the big green "Create" button on the left side of your dashboard.

Go ahead and enter any hostname you want, and choose a Droplet size. Any size will work, including the baseline 512 MB RAM Droplet. If you're planning on running anything in production on this server or wish to have a little more headroom, you may wish to choose the slightly larger 1 GB RAM Droplet.

Next, you can choose the region closest to yourself (or your visitors if you're using this as a production server). DigitalOcean has six different data centers at the moment, including New York, San Francisco, Singapore, and Amsterdam. Different data centers have different features such as private networking and IPv6[1], though these features are slated to roll out to all data centers at some point in time.

Finally, choose the Ubuntu 14.04 image and create your Droplet. It'll only take around 60 seconds to do so. Once the Droplet is running, SSH into the server using the credentials sent to you, or your SSH key if you've set up SSH authentication.

Installing HHVM

HHVM is relatively easy to install on Ubuntu, but varies based on your Ubuntu version. The main difference between the commands below is simply the version name when adding the repository to your sources.

Ubuntu 14.04

wget -O - http://dl.hhvm.com/conf/hhvm.gpg.key | sudo apt-key add -

echo deb http://dl.hhvm.com/ubuntu trusty main | sudo tee /etc/apt/sources.list.d/hhvm.list

sudo apt-get update

sudo apt-get install hhvm

Ubuntu 13.10

wget -O - http://dl.hhvm.com/conf/hhvm.gpg.key | sudo apt-key add -

echo deb http://dl.hhvm.com/ubuntu saucy main | sudo tee /etc/apt/sources.list.d/hhvm.list

sudo apt-get update

sudo apt-get install hhvm

Ubuntu 13.04

Ubuntu 13.04 isn't officially supported or recommended to use.

Ubuntu 12.04

sudo add-apt-repository ppa:mapnik/boost

wget -O - http://dl.hhvm.com/conf/hhvm.gpg.key | sudo apt-key add -

echo deb http://dl.hhvm.com/ubuntu precise main | sudo tee /etc/apt/sources.list.d/hhvm.list

sudo apt-get update

sudo apt-get install hhvm

If you're having issues with the add-apt-repository command on Ubuntu 12.04, then you may need to run sudo apt-get install python-software-properties.
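Since the command sets above differ only in the release codename, the repository line can also be derived from the running system. The following is a convenience sketch rather than part of the official instructions: it assumes lsb_release is available (it is on stock Ubuntu images) and that you are on one of the supported releases listed above. On 12.04 you would still need to add the Boost PPA first.

```shell
# Look up the release codename (e.g. trusty, saucy, precise).
codename="$(lsb_release -sc)"

# Same steps as above, with the codename filled in automatically.
wget -O - http://dl.hhvm.com/conf/hhvm.gpg.key | sudo apt-key add -
echo "deb http://dl.hhvm.com/ubuntu ${codename} main" | sudo tee /etc/apt/sources.list.d/hhvm.list
sudo apt-get update
sudo apt-get install hhvm
```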

Running HHVM

Once you've installed HHVM, you can run it on the command line as hhvm. Create a new Hack file with the following contents, then try running it with hhvm [filename].

<?hh

echo "Hello from HHVM " . HHVM_VERSION;

Note the lack of a closing tag-- in Hack, there are no closing tags and HTML is not allowed inline.
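As a quick end-to-end check, the file can be created and run straight from the shell. This is only a sketch: hello.hh is a hypothetical filename, and any name works.

```shell
# Write the example Hack file shown above to disk.
cat > hello.hh <<'EOF'
<?hh
echo "Hello from HHVM " . HHVM_VERSION;
EOF

# Run it with the HHVM command-line binary.
hhvm hello.hh
```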

Installing and Setting Up Nginx

Of course, installing HHVM for the command line is the easy part. To actually serve traffic to HHVM using Nginx, you have to run HHVM as a FastCGI server that Nginx forwards requests to. To do so, first install Nginx with sudo apt-get install nginx and start it with sudo service nginx start. To verify that Nginx installed correctly, visit your Droplet's IP address and you should see the Nginx default page.

Now, we can remove the default Nginx websites with the following commands:

sudo rm -f /etc/nginx/sites-available/*

sudo rm -f /etc/nginx/sites-enabled/*

Then, create a new configuration file for your website as /etc/nginx/sites-available/hhvm-site. You can change the name of the configuration file if you wish. The contents of the file should be similar to one of the following:

Emulating "mod_rewrite"

The Nginx equivalent of sending all requests to a single index.php file is as follows. Every request to this server will be sent to the index.php file, which is perfect for frameworks such as Laravel.

server {

# Running port

listen 80;

server_name www.example.com;

# Root directory

root /var/www;

index index.php;

location / {

try_files $uri @handler;

}

location @handler {

rewrite / /index.php;

}

location ~ \.php$ {

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

Traditional Setup

In this example, any requests to a script ending in .php will be executed by HHVM. For example, if you have hello.php in your web root, navigating to http://www.example.com/hello.php would cause the hello.php file to be executed by HHVM.

server {

# Running port

listen 80;

server_name www.example.com;

# Root directory

root /var/www;

index index.php;

location ~ \.php$ {

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

Also, ensure that you change all instances of the web root (/var/www) in the above configuration files to your own web root location, as well as the server_name. Alternatively, you can leave the web root as /var/www and just put your Hack files in that folder.

Now that you've created the file under sites-available, you can symlink it to the sites-enabled folder to enable it in Nginx.

sudo ln -s /etc/nginx/sites-available/hhvm-site /etc/nginx/sites-enabled/hhvm-site

Before you restart Nginx to apply the changes, start the HHVM fast-cgi enabled server with hhvm --mode daemon -vServer.Type=fastcgi -vServer.Port=9000. After the HHVM daemon is started, you can then run sudo service nginx restart to apply your Nginx configuration changes. If you have a Hack file in your web root, you should be able to visit your Droplet's IP and see the response.
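If the page does not load, a few checks on the server can narrow down where the request is getting stuck. The commands below are a sketch that assumes the FastCGI port 9000 used above and that netstat and curl are installed on the Droplet.

```shell
# Ask Nginx to validate its configuration files.
sudo nginx -t

# Confirm that a process is listening on the FastCGI port used above.
sudo netstat -tlnp | grep ':9000'

# Request the site locally and print the response headers and body.
curl -i http://127.0.0.1/
```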

Starting HHVM on Boot

HHVM currently does not automatically start up when your server is restarted. To change this, add the line below to /etc/rc.local, above the final exit 0 line, so that it runs on boot:

/usr/bin/hhvm --mode daemon -vServer.Type=fastcgi -vServer.Port=9000

HHVM should now start when your server boots up.

You should now have HHVM and Hack up and running on your server-- make sure you take a look at Hack's documentation for more information on the features of the language.

[1] Singapore is the first region with IPv6 support, while New York 2, Amsterdam 2, and Singapore have private networking.

While I've previously gone over development environments using Vagrant and Puppet, recent advancements in LXC container management (see: Docker) and applications that have popped up using this technology have made deploying to staging or production environments easier-- and cheaper.

Heroku, a fantastic platform that allows developers to focus on code rather than server management, has spoiled many with its easy git push deployment mechanism. With a command in the terminal, your application is pushed to Heroku's platform, built into what is known as a "slug", and deployed onto a scalable infrastructure that can handle large spikes of web traffic.

The problem with Heroku is its cost. While a single "Dyno" per application (equivalent to a virtual machine running your code) is free, scaling past a single instance costs approximately $35 a month. Each Dyno only includes half a gigabyte of RAM as well, which is minuscule compared to the cost-equivalent virtual machine from a number of other providers. For example, Amazon EC2 has a "Micro" instance with 0.615 gigabytes of RAM for approximately $15 a month, while $40 a month on Digital Ocean would net you a virtual machine with 4 gigabytes of RAM. But, with Heroku, you pay for their fantastic platform and management tools, as well as their quick response time to platform related downtime-- certainly an amazing value for peace of mind.

But, if you're only deploying "hobby" applications or prefer to manage your own infrastructure, there are a couple of options to emulate a Heroku-like experience.

Docker, LXC, and Containers

If you've been following any sort of developer news site, such as Hacker News, you've likely seen "Docker" mentioned quite a few times. Docker is a management system for LXC containers, a feature of Linux kernels to separate processes and applications from one another in a lightweight manner.

Containers are very similar to virtual machines in that they provide security and isolation between different logical groups of processes or applications. Just as a hosting provider may separate different customers into different virtual machines, Docker allows system administrators and developers to create multiple applications on a single server (or virtual server) that cannot interfere with each other's files, memory, or processor usage. LXC containers and the Docker management tool provide methods to limit RAM and CPU usage per container.
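As a rough illustration of per-container limits, the command below starts a container with a memory cap and a relative CPU weight. It is a sketch assuming a reasonably recent Docker release, where these options are spelled --memory and --cpu-shares (older releases used the short forms -m and -c); the container name demo-app and the ubuntu image are placeholders.

```shell
# Start a background container capped at 256 MB of RAM with a CPU weight of 512.
# "demo-app" and the "ubuntu" image are placeholders for your own names.
docker run -d --name demo-app --memory 256m --cpu-shares 512 ubuntu sleep 3600
```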

Additionally, Docker allows for developers to export packages containing an application's code and dependencies in a single .tar file. This package can be imported into any system running Docker, allowing easy portability between physical machines and different environments.
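The export and import round trip can be sketched as follows. The container name demo-app and the image tag demo-app:imported are hypothetical; note that docker export captures the container's filesystem only.

```shell
# Write the filesystem of an existing container to a tar archive.
docker export demo-app > demo-app.tar

# On another Docker host, turn the archive back into an image.
cat demo-app.tar | docker import - demo-app:imported
```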

Dokku and Deployment

Containers may be handy for separation of processes, but Docker alone does not allow for easy Heroku-like deployment. This is where platforms such as Dokku, Flynn, and others come in. Flynn aims to be a complete Heroku replacement, including scaling and router support, but is not currently available for use outside of a developer preview. Conversely, Dokku's goal is to create a simple "mini-Heroku" environment that only emulates the core features of Heroku's platform. But, for many, the recreation of Heroku's git push deployment and basic Buildpack support is enough. Additionally, Dokku implements a simple router that allows you to use custom domain names or subdomains for each of your applications.

Digital Ocean is a great cloud hosting provider that has recently gained significant traction. Their support is great and often responds within minutes, and their management interface is simple and powerful. Starting at $5 per month, you can rent a virtual machine with half a gigabyte of RAM and 20 gigabytes of solid state drive (SSD) space. For small, personal projects, Digital Ocean is a great provider to use. Larger virtual machines for production usage are also reasonably priced, with pricing based on the amount of RAM included in the virtual machine.

Another reason why Digital Ocean is great for Docker and Dokku is due to their provided pre-built virtual machine images. Both Dokku 0.2.0-rc3 and Docker 0.7.0 images are provided as of this publication, and in less than a minute, you can have a ready-to-go Dokku virtual machine running.

If you don't already have a Digital Ocean account, you can get $10 in free credit to try it out through this link. That's enough for two months of the 512 MB RAM droplet, or a single month with the 1 GB RAM droplet.

Setting Up the Server

After you've logged into Digital Ocean, create a new Droplet of any size you wish. The 512 MB instance is large enough for smaller projects and can even support multiple applications running at once, though you may need to enable swap space to prevent out-of-memory errors. The 1 GB Droplet is better for larger projects and runs only $10 per month. If you are simply experimenting, you only pay for the hours you use the instance (e.g. $0.007 an hour for the 512 MB Droplet), and Digital Ocean regularly provides promotional credit for new users on their Twitter account. If you follow this tutorial and shut down the instance immediately afterwards, it may cost you as little as two cents. You can choose any Droplet region you wish-- preferably one that is close to you or your visitors for the lowest latency. Digital Ocean currently has two New York, two Amsterdam, and one San Francisco datacenter, with Singapore coming online in the near future. Droplets cost the same in each region, unlike Amazon or other providers.
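If you do go with the 512 MB Droplet, swap space can be enabled once the Droplet is up. The commands below are one common way to do it on Ubuntu, shown as a sketch; the 1 GB size and the /swapfile path are arbitrary choices, not values from Digital Ocean or Dokku.

```shell
# Create a 1 GB swap file readable only by root, then enable it.
sudo fallocate -l 1G /swapfile
sudo chmod 600 /swapfile
sudo mkswap /swapfile
sudo swapon /swapfile

# Confirm that the swap space is active.
free -m
```

A swap file enabled this way lasts only until the next reboot unless it is also listed in /etc/fstab.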

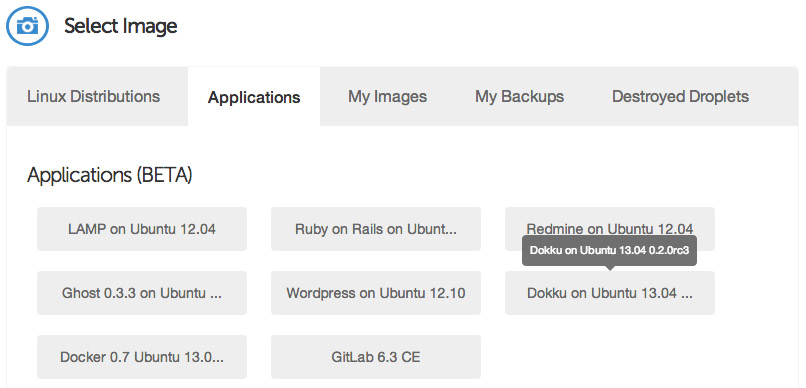

Under the "Select Image" header on the Droplet creation page, switch to the "Applications" tab and choose the Dokku image on Ubuntu 13.04. This image has Dokku already set up for you and only requires a single step to begin pushing applications to it.

Select your SSH key (if you haven't already set one up, you will need to do so before launching your Droplet), and then hit the big "Create Droplet" button at the bottom of the page. You should see a progress bar fill up, and in approximately one minute, you'll be taken to a new screen with your Droplet's information (such as IP address).

Take the IP address, and copy and paste it into a browser window. You'll see a screen pop up with your SSH public key, as well as some information pertaining to the hostname of your Dokku instance. If you specified a fully qualified domain name (e.g. apps.example.com) as your Droplet's hostname when you created it, the domain will be automatically detected and pre-filled in the setup screen. If this is the case, you can just check the "use virtualhost naming" checkbox, hit "Finish Setup", and continue on to set up your DNS.

However, if you entered a hostname that is not a fully qualified domain name (e.g. apps-example), you'll just see your IP address in the Hostname text box. Enter the fully qualified domain name that you'll use for your server, select the "virtualhost naming" checkbox, and click "Finish Setup". For example, if you want your applications to be accessible under the domain apps.example.com, you would enter apps.example.com in the "Hostname" field. Then, when you push an app named "website", you will be able to navigate to website.apps.example.com to access it. You'll be able to set up custom domains per-app later (e.g. have www.andrewmunsell.com show the application from website.apps.example.com).

In any case, you'll be redirected to the Dokku Readme file on GitHub. You should take a minute to read through it, but otherwise you've finished the setup of Dokku.

DNS Setup

Once your Droplet is running, you must set up your DNS records to properly access Dokku and your applications. To use Dokku with hostname-based apps (i.e. not an IP address/port combination), your DNS provider must support wildcard DNS entries. Amazon Route 53 is a relatively cheap solution that supports wildcard DNS entries (approximate cost of $6 per year), while Cloudflare (free) is another.

To properly resolve hostnames to your Droplet running Dokku, two A DNS records must be set:

A [Hostname] [Droplet IP address]

A *.[Hostname] [Droplet IP address]

For example, if your Droplet is running with a hostname of apps.example.com and you wish to use apps under *.apps.example.com, you would use the following DNS records:

A apps.example.com [Droplet IP address]

A *.apps.example.com [Droplet IP address]
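Once the records have propagated, you can check both of them from your own machine. This sketch assumes the dig utility is installed (on Ubuntu it ships in the dnsutils package) and uses website as an arbitrary subdomain to exercise the wildcard record.

```shell
# Both lookups should print the Droplet's IP address.
dig +short apps.example.com A
dig +short website.apps.example.com A
```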

For more information on DNS, see various resources available to you on the Internet, including Cloudflare's documentation on the subject.

Deploying Code

Dokku allows for many of your existing apps, including those built for Heroku, to immediately run on your own Dokku instance. Dokku uses a package called a "Buildpack" to define how your application is packaged for deployment. For example, the PHP Buildpack defines behavior to pull down compiled versions of Apache, PHP, and other dependencies, and perform basic setup to run a PHP application. Similarly, the Node.js Buildpack retrieves dependencies by fetching the Node.js binary, NPM, and all of your application's dependencies as defined in package.json.

To illustrate how Dokku works, we'll create a simple Node.js application that defines dependencies and responds to HTTP requests with "Hello, World!"

Create a new directory on your computer with the following files and contents:

/package.json

{

"name": "dokku-demo-application",

"version": "1.0.0",

"private": true,

"engines": {

"node": ">=0.10.0",

"npm": ">=1.3"

},

"dependencies": {

"express": "~3.0"

}

}

/server.js

var PORT = process.env.PORT || 8080;

var express = require("express");

var app = express();

app.use(app.router);

app.use(express.static(__dirname + "/public"));

app.get("/", function(req, res){

res.send("Hello, World!");

});

app.listen(PORT);

/Procfile

web: node server.js

This is a simple example Express application that creates a single HTTP GET route--the root directory /--and responds with a single phrase. As you can see, this Dokku application's structure mirrors Heroku's requirements. The Procfile defines a single "web" command to be started. All Dokku Buildpacks will normally ignore other process types defined in the Procfile.

After you've created the files, create a Git repository using a Git GUI such as Tower or SourceTree, or the command line, and commit the previously created files. You'll also need to define a remote repository-- your Dokku instance. For example, if your Dokku instance was hosted at apps.example.com, you would define a remote of dokku@apps.example.com:app-name. You can modify the app-name as desired, as this will correspond to the subdomain that your application will be served from.

Once you've added the remote, push your local master branch to the remote's master. If everything is set up correctly, you'll see a log streaming in that indicates Dokku's current task. Behind the scenes, Dokku creates a new Docker container and runs the Buildpack's compilation steps to build a Docker image of your application. If the build succeeds, your application is deployed into a new container and you are provided with a URL at which to access your application. In this example, the application would be accessible at http://app-name.apps.example.com/ and will display "Hello, World!"
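For those using the command line, the repository setup and deployment described above can be sketched as follows. It assumes Dokku's standard dokku SSH user, the example host apps.example.com, and the app name app-name; the remote name dokku is an arbitrary choice.

```shell
# Run inside the directory containing package.json, server.js, and Procfile.
git init
git add package.json server.js Procfile
git commit -m "Initial commit"

# Point a remote at the Dokku instance, then push master to deploy.
git remote add dokku dokku@apps.example.com:app-name
git push dokku master
```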

Using a Custom Domain

While your application is accessible at the subdomain provided to you after your application is deployed, you may also want to use a custom domain for your application (e.g. api.example.com). You can do this in two ways-- use the fully qualified domain name desired for your application's repository name, or edit the generated nginx.conf file on your Dokku server to include your domain name.

The first method is quite simple-- instead of pushing your repository to dokku@apps.example.com:app-name, you simply name your app based on your domain. For example: dokku@apps.example.com:api.example.com.

Alternatively, you can SSH into your Dokku instance using the IP address or hostname and the root user to modify the nginx.conf file for your application. Once you're SSH-ed into your instance, simply change directories to /home/dokku/[application name] and edit the nginx.conf file. For example, the application we pushed ("app-name") would be found at /home/dokku/app-name. To add your own domain, simply add your custom domain name to the end of the server_name line, with each domain separated by spaces. Changes to the domain in this file will not be overwritten on the next git push.

Going Further

As you can see, Dokku is an incredibly powerful platform that mimics Heroku very closely. It provides the basics needed for deploying an application easily, and allows for quick zero-downtime deployments. With a host like Digital Ocean, you can easily and cheaply host multiple applications. Careful developers can even deploy to a separate "staging" application before pushing to the production app, allowing for bugs to be caught before they're in the live environment.

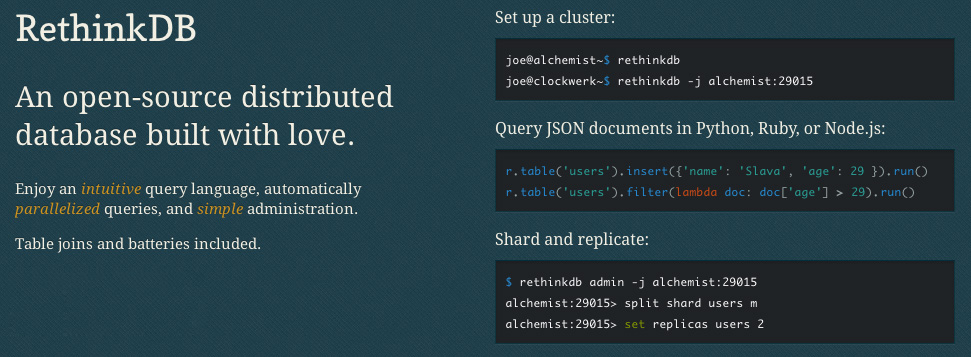

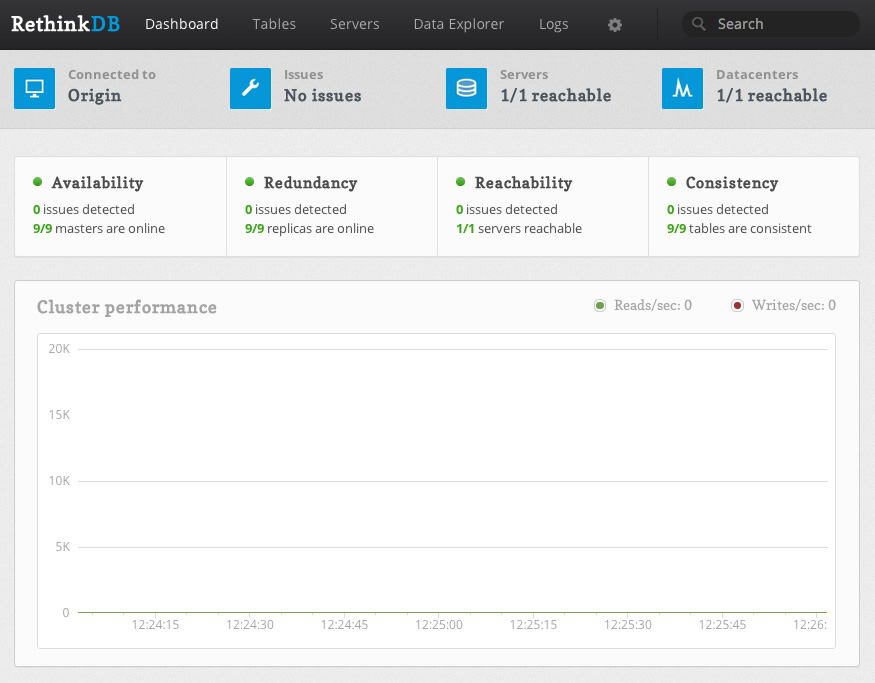

RethinkDB is a distributed document-store database that is focused on ease of administration and clustering. RethinkDB also features functionality such as map-reduce, sharding, multi-datacenter functionality, and distributed queries. Though the database is relatively new, it has been funded and is moving quickly to add new features and a Long Term Support release.

One major issue still remains with RethinkDB, however-- it's relatively difficult to secure properly unless you have security group or virtual network functionality from your hosting provider (a la Amazon Web Services Virtual Private Cloud, security groups, etc.). For example, RethinkDB's web administration interface is completely unsecured when exposed to the public Internet, and the clustering port does not have any authentication mechanisms. Essentially, this means that if you have an exposed installation of RethinkDB, anyone can join your database cluster and run arbitrary queries.

DigitalOcean, a great startup VPS provider, is a cheap means of trying out RethinkDB for yourself. The one issue is, they currently do not provide any easy way of securing clusters of RethinkDB instances. Unlike Amazon's security groups, which allow you to restrict traffic between specific instances, every DigitalOcean VPS can talk to each other over the private network[1]. Essentially, this would allow any DigitalOcean VPS in the data center to attach itself to your RethinkDB cluster, which is less than ideal.

Because of this, DigitalOcean is not a great host to run a cluster on if you're looking to get up and running quickly. There are ways around this, such as running a VPN (especially a mesh VPN like tinc) or manually adding each RethinkDB's IP address to your iptables rules, but this is a much more complicated setup than using another host that has proper security groups.

However, this doesn't mean that DigitalOcean is a bad host for your RethinkDB database-- especially if you're looking to try out the database or if you're just running a single node (which is fine for many different applications). In this tutorial, we'll go over how to properly set up a RethinkDB node and configure iptables to secure access to the database and web administration interface. The steps are written for DigitalOcean specifically, but they apply to any VPS or dedicated server provider.

Launching a Droplet

The first step you want to take is to sign up for DigitalOcean. If you sign up from this link, you will receive $10 in credit for free. This is enough to run a 512 MB droplet for two months, or a 1 GB RAM droplet for a single month[2].

After registering, log into your account and create a new droplet[3] on the dashboard. Enter a hostname, choose an instance size[4], select the region closest to you[5] for the lowest latency, and choose an operating system. For now, "Ubuntu 13.10 x64" or "Ubuntu 13.04 x64" are good choices unless you have another preference. If you wish to use an SSH key for authentication (which is highly recommended), select which key you'd like preinstalled on your Droplet. After you've selected all of the options you'd like to use, click the large "Create Droplet" button at the bottom of the screen.

Installing RethinkDB

Once your instance is launched, you're taken to a screen containing your server's IP address. Go ahead and SSH into it with either the root password emailed to you or with your SSH key if you've selected that option. You should be taken to the console for your freshly launched Ubuntu instance.

To actually install RethinkDB, you'll need to add the RethinkDB Personal Package Archive (PPA)[6] with the command sudo add-apt-repository ppa:rethinkdb/ppa.

Next, update your apt sources with sudo apt-get update, and then install the RethinkDB package with sudo apt-get install rethinkdb.

Configuring RethinkDB

As of now, you could run the command rethinkdb, and RethinkDB would start up and create a data file in your current directory. The problem is, RethinkDB does not start up on boot by default and is not configured properly for long term use.

To configure RethinkDB, we'll use a configuration file that tells RethinkDB how to run the database. Go ahead and copy the sample configuration into the correct directory, and then edit it:

sudo cp /etc/rethinkdb/default.conf.sample /etc/rethinkdb/instances.d/instance1.conf

sudo nano /etc/rethinkdb/instances.d/instance1.conf

Note that there are two commands above-- if there is a line break inside of the first command, ensure you copy and paste (or type out) the whole thing. This will open up the "nano" editor, though you can substitute this with any other editor you have installed on your VPS.

RethinkDB Configuration Options

The sample configuration file, as of RethinkDB v0.11.3, is included below for reference:

#

# RethinkDB instance configuration sample

#

# - Give this file the extension .conf and put it in /etc/rethinkdb/instances.d in order to enable it.

# - See http://www.rethinkdb.com/docs/guides/startup/ for the complete documentation

# - Uncomment an option to change its value.

#

###############################

## RethinkDB configuration

###############################

### Process options

## User and group used to run rethinkdb

## Command line default: do not change user or group

## Init script default: rethinkdb user and group

# runuser=rethinkdb

# rungroup=rethinkdb

## Stash the pid in this file when the process is running

## Command line default: none

## Init script default: /var/run/rethinkdb/<name>/pid_file (where <name> is the name of this config file without the extension)

# pid-file=/var/run/rethinkdb/rethinkdb.pid

### File path options

## Directory to store data and metadata

## Command line default: ./rethinkdb_data

## Init script default: /var/lib/rethinkdb/<name>/ (where <name> is the name of this file without the extension)

# directory=/var/lib/rethinkdb/default

### Log file options

## Default: <directory>/log_file

# log-file=/var/log/rethinkdb

### Network options

## Address of local interfaces to listen on when accepting connections

## May be 'all' or an IP address, loopback addresses are enabled by default

## Default: all local addresses

# bind=127.0.0.1

## The port for rethinkdb protocol for client drivers

## Default: 28015 + port-offset

# driver-port=28015

## The port for receiving connections from other nodes

## Default: 29015 + port-offset

# cluster-port=29015

## The host:port of a node that rethinkdb will connect to

## This option can be specified multiple times.

## Default: none

# join=example.com:29015

## All ports used locally will have this value added

## Default: 0

# port-offset=0

### Web options

## Port for the http admin console

## Default: 8080 + port-offset

# http-port=8080

### CPU options

## The number of cores to use

## Default: total number of cores of the CPU

# cores=2

bind

There are a couple of important entries we need to look at. The first is the bind address. By default, RethinkDB will only bind on the local IP address 127.0.0.1. This means that nothing outside of the machine the RethinkDB server is running on can access the data, join the cluster, or see the web admin UI. This is useful for testing, but in a production environment where the database is running on a different physical server than the application code, we'll need to change this.

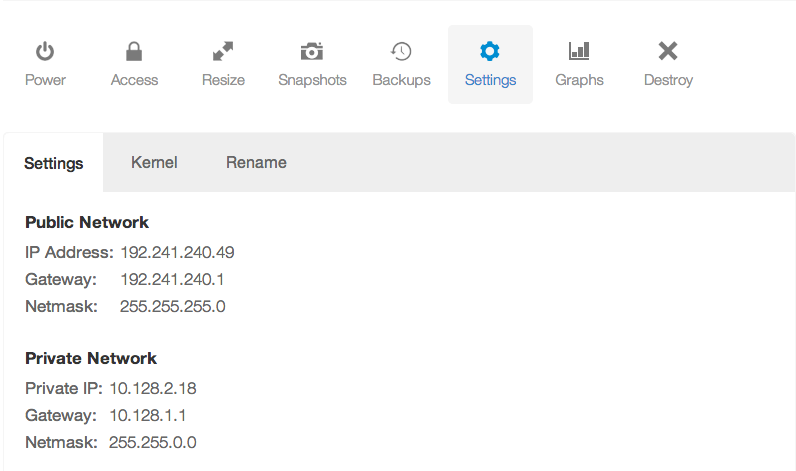

If you've launched an instance in a data center that supports private networking, you can change the bind option to your private IP address[7] to start with. For example, if your private IP address is 10.128.2.18, you could use that value for the bind option. Also, make sure you remove the leading hash "#" symbol. This will uncomment the line and make the configuration active. If you want your database to be accessible to the public Internet, you may use your public IP address. Note that there are security ramifications of exposing your RethinkDB instance to the Internet, though we'll address them a little later.

If you wish to bind to all IP addresses-- including public IP addresses--you can use 0.0.0.0.
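If you would rather script the change than edit the file by hand, sed can uncomment and set the option in one step. The sketch below works on a scratch file so it is safe to try anywhere; it assumes the sample ships the option commented out as "# bind=127.0.0.1" and uses the example private address 10.128.2.18 from above.

```shell
# Scratch file standing in for /etc/rethinkdb/instances.d/instance1.conf.
conf="$(mktemp)"
printf '%s\n' '# bind=127.0.0.1' '# driver-port=28015' > "$conf"

# Drop the leading "#" from the bind line and set the private address.
sed -i 's/^# *bind=.*/bind=10.128.2.18/' "$conf"

# Show the result: bind is now active, the other option is still commented out.
cat "$conf"
```

To apply it for real, run the same sed expression with sudo against /etc/rethinkdb/instances.d/instance1.conf.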

driver-port, cluster-port

The driver and cluster port options generally should not be modified unless you have a reason to do so. Modifying the ports just so that someone may not "guess" which ports you're using for the RethinkDB instance is not secure-- always assume that someone will find which ports you've configured, and secure your machine appropriately.

http-port

This option configures which port the HTTP administration UI will be accessible on. As with the driver-port and cluster-port, you can change this if the port is already in use by another service.

However, note that the admin UI is not secured in any way. Anyone with access to the admin panel can edit and delete machines from your cluster, and create, edit, and delete database tables and records. However, the admin UI will only be available on the bind address you've configured, so if you've left your bind address as 127.0.0.1, you will only be able to access the admin UI directly from the machine running RethinkDB.

join

The join address will not be used in this lesson, though this option configures which hostname or IP address and port your RethinkDB instance will attempt to join to form a cluster.

Once you've configured all of the options appropriately, you can save the configuration file and start the RethinkDB service:

sudo /etc/init.d/rethinkdb restart

Securing RethinkDB

Now you have RethinkDB running on your server, but it is completely unsecured if your bind address is anything but 127.0.0.1 or another non-accessible IP address. We need to do a couple of things:

- Restrict access to the cluster port so that no other machines can connect to the cluster

- Restrict access to the HTTP admin web UI so that malicious parties cannot access it

- Secure the client driver port so that RethinkDB requires an authentication key, and optionally restrict the port to only allow a specific set of IP addresses to connect

Using iptables to Deny Access to Ports

One method of restricting access to a specific port is through the use of iptables. To block traffic to a specific port, we can use the command:

sudo iptables -A INPUT -p tcp --destination-port $PORT -j DROP

Simply change $PORT to the specific port you'd like to drop traffic for. For example, to deny access to the cluster port (since we're not building a cluster of RethinkDB instances), we can use the command:

sudo iptables -A INPUT -p tcp --destination-port 29015 -j DROP

This assumes that you have not changed the default cluster communication port of 29015. If you have, replace 29015 in the above command with the value of your "cluster-port" configuration entry.
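If you did change the port, it can help to build the command from a variable rather than editing it by hand. The following is a minimal dry-run sketch: it only prints the rule and does not touch the firewall. The drop_rule function and the CLUSTER_PORT variable are illustrative names, not part of RethinkDB or iptables.

```shell
# Dry run: print the DROP rule for a port instead of applying it.
# CLUSTER_PORT is a hypothetical variable; set it to your "cluster-port" value.
CLUSTER_PORT="${CLUSTER_PORT:-29015}"

# drop_rule is an illustrative helper, not an iptables feature.
drop_rule() {
  printf 'sudo iptables -A INPUT -p tcp --destination-port %s -j DROP\n' "$1"
}

drop_rule "$CLUSTER_PORT"
```

Once the printed line looks right, you can copy it into your VPS console and run it.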

Now, we'd also like to deny all traffic to the web administration interface that's located on port 8080. We can do this in a similar manner:

sudo iptables -A INPUT -p tcp --destination-port 8080 -j DROP

However, this command denies access to the web administration UI for everyone, including yourself. There are three primary ways to give yourself access to the web UI, listed from most secure to least secure:

- Use an SSH tunnel to access the interface

- Use a reverse proxy to add a username and password prompt to access the interface

- Drop traffic on port 8080 for all IP addresses except your own

Accessing the Web Administration UI Through an SSH Tunnel

To access the web administration UI through an SSH tunnel, you can use the following set of commands.

First, we must make the administration UI accessible on localhost. Because we dropped all traffic to the port 8080, we want to ensure that traffic from the local machine is allowed to port 8080.

sudo iptables -I INPUT -s 127.0.0.1 -p tcp --dport 8080 -j ACCEPT

The above command does one thing-- it inserts a rule, before the DROP everything rule, to always accept traffic to port 8080 from the source 127.0.0.1-- the local machine. This will allow us to tunnel into the machine and access the web interface.
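The ordering matters because iptables checks the rules in a chain from top to bottom and acts on the first one that matches. The following toy model is plain shell, not real iptables: first_match and the SOURCE:ACTION rule format are made up for illustration, ports are ignored, and the default policy is assumed to be ACCEPT when nothing matches. It shows why the ACCEPT rule has to sit ahead of the DROP rule.

```shell
# Toy model of how a chain is walked: the first matching rule wins.
# Usage: first_match SOURCE_IP RULE...   where each RULE is "SOURCE:ACTION"
# and a SOURCE of "any" matches every address.
first_match() {
  src="$1"
  shift
  for rule in "$@"; do
    rule_src="${rule%%:*}"   # text before the first colon
    action="${rule##*:}"     # text after the last colon
    if [ "$rule_src" = "any" ] || [ "$rule_src" = "$src" ]; then
      echo "$action"
      return 0
    fi
  done
  echo "ACCEPT"              # assumed default policy
}

# ACCEPT inserted before DROP: local traffic gets through, others do not.
first_match 127.0.0.1   "127.0.0.1:ACCEPT" "any:DROP"   # prints ACCEPT
first_match 203.0.113.7 "127.0.0.1:ACCEPT" "any:DROP"   # prints DROP

# ACCEPT placed after DROP: the DROP rule matches first, even for localhost.
first_match 127.0.0.1   "any:DROP" "127.0.0.1:ACCEPT"   # prints DROP
```

This is why the command above uses -I (insert at the top of the chain) rather than -A (append to the end).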

Next, we need to actually set up the tunnel on your local machine. This should not be typed into your VPS console, but in a separate terminal window on your laptop or desktop.

ssh -L $LOCALPORT:localhost:$HTTPPORT $IPADDRESS

Replace the $LOCALPORT variable with a free port on your local machine, $HTTPPORT with the port of the administration interface, and $IPADDRESS with your VPS IP address. Additionally, if you SSH into your VPS with another username (e.g. root), you may prepend "$USERNAME@" to the IP address, replacing $USERNAME with the username you use to authenticate.

Once you've run the above commands, you should be able to visit localhost:$LOCALPORT in your local web browser and see the RethinkDB web interface.

For a complete example, the following exposes the RethinkDB administration interface on localhost:8081:

ssh -L 8081:localhost:8080 $USERNAME@$IPADDRESS

Using a Reverse Proxy

Because using a reverse proxy involves setting up Apache, Nginx, or some other software on your VPS, it is better to refer you to the official RethinkDB documentation on the subject. The setup steps aren't long, but they are outside the scope of this tutorial.

If you set up a reverse proxy, make sure you still allow local traffic to the web administration port.

sudo iptables -I INPUT -s 127.0.0.1 -p tcp --dport 8080 -j ACCEPT

Allowing Access for Your IP Address

The final method we'll go over for giving yourself access to the web UI is whitelisting your IP address. This is done in a similar way to allowing local access to port 8080, except with your own IP address instead of 127.0.0.1. After finding your external IP address, you can run the following command on the VPS, replacing $IPADDRESS with the IP address:

sudo iptables -I INPUT -s $IPADDRESS -p tcp --dport 8080 -j ACCEPT

However, I would like to reiterate the insecurity of this method: anyone who shares your external IP address, including everyone on your WiFi or home network, will have unrestricted access to your database.

Allowing Access for Client Drivers

Now that you've allowed yourself access to the web administration UI, you also need to ensure that the client drivers and your application can access the client port properly, and that the access is secured with an authentication key.

Setting an Authentication Key

First and foremost, you should set an authentication key for your database. This will require all client driver connections to present this key to your RethinkDB instance to authenticate, and adds an additional level of security.

On your VPS, you'll need to run two commands: one to allow local connections to the cluster port, which the administration command line interface needs, and another to set the authentication key. First, allow the local connections:

sudo iptables -I INPUT -s 127.0.0.1 -p tcp --dport 29015 -j ACCEPT

Next, we'll run the RethinkDB command line tool:

rethinkdb admin --join 127.0.0.1:29015

This will bring you into the command line administration interface for your RethinkDB instance. There, run a single command, set auth $AUTHKEY, replacing $AUTHKEY with your authentication key.

After you're done, you can type exit to leave the administration interface, or you can take a look at the RethinkDB documentation to see other commands you can run.

Recall that, at this point, the client port (by default, 28015) is still accessible from the public Internet or on whatever interfaces you've bound RethinkDB to. You can increase the security of your database by blocking access (or selectively allowing access) to the client port using iptables and commands similar to those listed earlier in the tutorial.
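As a sketch of what that could look like, the following prints (without running) a pair of rules for the client port: a DROP rule appended for everyone, and an ACCEPT rule inserted ahead of it for one application server. The client_port_rules helper is hypothetical, 203.0.113.10 is a documentation-only placeholder address, and 28015 is assumed to be the unchanged default "driver-port".

```shell
# Dry run: print rules that limit the client driver port to one address.
# client_port_rules is an illustrative helper, not part of iptables.
# $1 = IP address allowed to connect, $2 = client port (assumed default 28015).
client_port_rules() {
  allowed_ip="$1"
  port="${2:-28015}"
  # Append a rule dropping all traffic to the client port...
  printf 'sudo iptables -A INPUT -p tcp --dport %s -j DROP\n' "$port"
  # ...then insert an ACCEPT rule above it for the allowed address.
  printf 'sudo iptables -I INPUT -s %s -p tcp --dport %s -j ACCEPT\n' "$allowed_ip" "$port"
}

client_port_rules 203.0.113.10
```

If your application runs on the same VPS as RethinkDB, pass 127.0.0.1 as the allowed address instead.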

Further Reading

Now that you've set up RethinkDB and secured it using iptables, you can access the administration UI and connect to your instance using a client driver with an authentication key. Though we've taken basic security measures to run RethinkDB on DigitalOcean, it's still recommended to take additional precautions. For example, you may wish to use a mesh VPN such as tinc to encrypt the database traffic between your clustered instances if you choose to expand your cluster in the future.

It's also worth reading over the fantastic RethinkDB official documentation for additional instruction on configuring your instance or cluster, or on how to use the administration interface or the ReQL query language [8].

- Private networking is only supported in specific data centers at this time, including the NYC 2, AMS 2, and Singapore data centers. ↩

- DigitalOcean's pricing page has a switch that lets you see the hourly or monthly price of their servers. The monthly price is the maximum you can pay per month for that specific server, even if the number of hours in a month times the hourly price is more. For example, a 512 MB VPS is $0.007 per hour or $5 a month. A month has at most 31 days, and 31 days times 24 hours times $0.007 per hour equals about $5.21. However, because the monthly price of the VPS is $5, you only pay that amount. ↩

- DigitalOcean calls their VPS servers "Droplets". This is similar to Amazon's "Instance" terminology. ↩

- I highly recommend at least using a 1 GB Droplet if you're planning on actually using RethinkDB or trying it out with large amounts of data. If you just want to check out RethinkDB and see how it works, you can start out with a 512 MB Droplet just fine. ↩

- Remember, you must select a region with private networking (such as NYC 2, AMS 2, or Singapore) if you wish to create a cluster and use the private IP address for cluster communication. This way, you're not billed for cluster traffic. However, for a single node, you may choose any data center you'd like. ↩

- Getting an error saying you do not have the command "add-apt-repository"? If you're running Ubuntu 12.10 or newer, then install it with sudo apt-get install software-properties-common. Ubuntu versions older than 12.10 should use the command sudo apt-get install python-software-properties. ↩

- You can find your private IP address (or public IP address) in your DigitalOcean control panel, under the Droplet you're running, in the settings tab. ↩

- I realize this is redundant. ↩