Looking Through Glass

Over the course of two days in a relatively quiet area of south Seattle, one of the biggest companies in technology took over a building called Sodo Park.

The space, a small, old-looking building, is commonly used for events such as weddings, holiday parties, and corporate gatherings. From the outside, there was little sign that anything was occurring at all-- only a few lone parking signs across the street gave any hint of the company's presence. But as you walked to the front door, flanked by a couple of employees in nondescript black T-shirts, it was apparent that this was more than just a "corporate event."

Stepping inside revealed a large, open space filled with mingling event staff and visitors. After I entered through the door, I was herded to a table at which I was greeted by smiling Google employees sitting behind their Chromebook Pixel laptops. After I filled out a media release form and checked in my jacket, I walked into a short queue to be introduced to the functionality of Glass. Behind me were several clear cases containing prototypes of the technology-- smartphones affixed to glasses frames seemed to be a common theme.

At this point, I had a chance to look around the room. Though I had missed it earlier, employees were walking around the room with Glass on. No matter where you were in the room, you were being watched by tiny cameras mounted on each staff member's head.

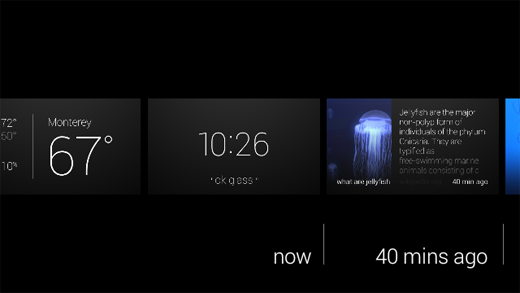

Several minutes later, a friendly woman came over with an Android tablet to welcome the individuals in the queue to the "Seattle Through Glass" event, and gave a quick demonstration of the gestures. The tablet, which was paired to the glasses, displayed a mirrored version of the Glass interface-- everything she saw, we were able to see as well.

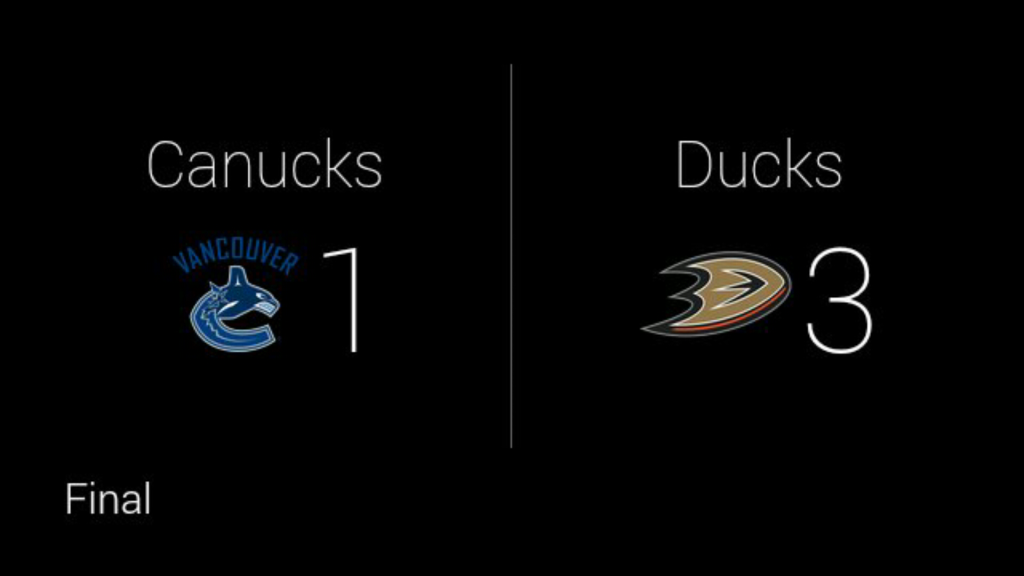

At first, she pulled up sports scores for the Mariners baseball game using the voice interface and showed simple features such as the Timeline. Near the end of the demo, in what seemed to shock her audience, she spoke a command-- "Ok Glass, take a picture." Immediately, the photo popped up on her Glass display, and in turn was mirrored onto the tablet for us to see. Several individuals were taken aback, surprised by the lack of time to get ready for the photo.

We were then ushered over to a dark corner of the room, and were all provided with white pairs of Google Glass for ourselves to try. After putting the glasses on and adjusting them slightly, I tapped the touch-sensitive panel on the right side of my head and a floating ghost of a display appeared in the corner of my vision.

At first, I was slightly confused-- in all my past experiences, I've never had to think about how to focus on something. After I looked up and to the right, however, the display became clear and in focus.

"1:21 PM"

"ok glass"

After the device was woken up with a tap, there were only two pieces of information displayed-- the first being the current time. Just below the thin, white timestamp was simply the words "ok glass" in quotation marks.

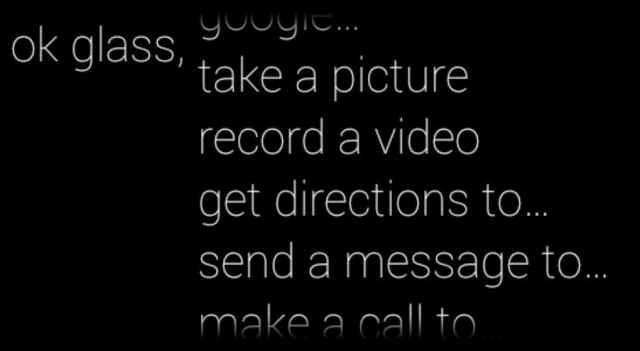

"Ok, Glass," I said.

When you pick up a Pebble smart watch, it immediately has a sense of purpose. Similarly, a Nest has a place and function on your wall-- you know what to do with it. Though modern smart devices have capabilities beyond their traditional counterparts, they always have a sense of purpose-- even if that is to simply display the time.

But with Google Glass, I paused. I didn't know what to do. On my face sat a $1,500 set of computerized glasses-- connected to the internet and one of the largest knowledge engines in the world, nonetheless-- and I couldn't summon up a simple query. I had been overcome with a feeling of blankness-- there wasn't an obvious use for Google Glass, in my mind.

I quickly swiped down on the frame, a "back" gesture that powered the display off again.

Once again, I said, "Ok Glass." But this time, I managed to eke out a simple--if forced--question: "Google, what is the height of the Space Needle?"

The device, with its relatively mechanical voice, returned the answer-- 605 feet.

At that point, Glass felt familiar: the voice was the same used in Google Now, as well as Google's other voice-enabled products. The concept of speaking to your glasses was still alien to me, yet the familiarity of Google Glass's response made it seem like another extension of myself in the same way as my phone always had been.

I tried another query-- "Ok Glass, Google, how far is it from Seattle to San Diego?"

This time, instead of the "Knowledge Graph" card displayed in response to my last query, the glasses popped up with a Google Maps card-- showing walking directions from Seattle to San Diego. While it answered my question (it takes some 412 hours across 1,200 miles, in case you're wondering), the exact response wasn't quite what I was looking for.

I tried taking several photos and sharing them on Google+--a process that was relatively streamlined given the lack of a traditional interface--as well as swiping through past "Cards" that previous demo-ers had summoned in the hours before I arrived. The timeline was filled with several different queries and apps, one of which was CNN. Curious, I tapped on the frame while a news story about Malaysia Airlines Flight 370 was on screen, and the still photo was brought into motion.

This, admittedly, was one of the demonstrations that awed me the most. I felt like some sort of cyborg, able to watch breaking news stories on a virtual screen floating in front of my face. The sound was muddied, and though audible, not high quality. While it was passable in the exhibition room, even with the various conversations going on around me, I am not convinced it would have been loud enough to hear over the noise at a bus or train station.

Having played with the CNN story enough, I once again racked my brain to think of features to try. Eventually, I settled on simply looking up a historical event. I was brought to a minimalistic list of web search results, though I didn't anticipate I would be able to do much with them.

To my surprise, tapping on a result brought up the mobile Wikipedia page in a full web browser. Sliding my fingers around on the frame manipulated the page. Zooming and panning around was relatively natural feeling, though I could not figure out how to click on links.

With the basics of Glass under my belt, I proceeded to the opposite side of the room-- a slightly brighter, more lively corner decorated with guitars and stereo sets. Along with the acoustic equipment was another table-- this time, with several sets of black Google Glass.

A routine similar to that at the first demonstration area ensued, though with one difference-- the Google staff member pulled out a set of odd-looking headphones from out of sight, and plugged them into the micro-USB port on the glasses.

With this newest pair of Google Glass once again on my face, I woke it up and asked it to play "Imagine Dragons." Hooked up to Google Play Music All Access, I was able to command the device to play any song I could imagine-- all with my voice.

There are several inherent flaws with listening to music on Glass, however. First, because there is no 3.5mm headphone jack, there is an unfortunate lack of quality headphones. I own a pair of Klipsch X10 earbuds-- certainly not a set of custom in-ear monitors that cost half a grand, but leaps and bounds better than the headphones that are included with your phone or iPod.

The earbuds I was given at the event were specifically designed for use with Glass, not only because of the micro-USB connector, but also because the cable to one earbud was shorter than the cable to the other. This was necessary because the distance from the micro-USB port to your right ear is only several inches, whereas the cable leading to your left ear has to be significantly longer. Normal headphone cords would simply dangle around your right ear.

Like Apple's EarPods, they had a funny shape designed to project sound into your ear. Also like Apple's headphones, to my dismay, the sound quality was relatively mediocre. It was a step up from the bone conduction speaker that's embedded in the glasses frames, but that's admittedly not an impressive feat.

If you listen to major artists, whether it be Imagine Dragons, Kanye West, or Lady Gaga, you'd have no issues with Google Glass. However, some obscure artists would sometimes fail to be recognized by the voice recognition. For example, it took four or five tries for my Glass to recognize "listen to Sir Sly." Instead of playing the desired artist, Glass would misunderstand me and often attempt to look up an artist named "Siri fly."

As I stood there attempting to enunciate the word "sir" to the best of my ability, it was clear that the technology was far from ready. It's awkward enough to dictate your music choices out loud, but it's even worse if you have to do it repeatedly. Given the number of odd looks I received from those at the event, imagine the reaction of the people around you if you were riding a bus or train.

Eventually, my frustration overcame my initial awe, and I moved to the final corner of the room.

When I walked in, I had noticed this particular setup, though without a clue what it was for. There were several boxes, varying in size, with signs on them in some foreign language-- some artistic exhibit, I imagined. But as I made my trek through the swarms of Google employees swiping their temples on their own sets of Google Glass, I realized what the subject of the next demonstration was.

The final booth had the most colorful Google Glass frames of all: a bright, traffic cone-orange. Perhaps it was indicative of the exciting demonstration that was to follow.

With the glasses on, the Google employee instructed me to utter a single voice command:

"Ok Glass, translate this."

Instantly, an app launched on the screen with a viewfinder similar to that of a camera. Essentially, it appeared as though Glass provided a picture-in-picture experience. I walked over to an inconspicuous white board.

"il futuro è qui," read the sign.

In an instant, where the Italian once was, Glass replaced it with the words, "The future is here." No kidding.

The concept of in-place translation is not new. In fact, it's existed for several years on other platforms, such as the Word Lens app on iPhone. The impressive part of the demo wasn't the fact that the translation could be done in place, but rather the fact that it was the glasses I was wearing doing the translation, and projecting the text onto a prism that seemingly hovered in front of me.

I wandered around the demonstration area and looked at each sign, thinking about how useful the technology would have been on my recent trip to Thailand.

After several more minutes, I made my way over to the back of the room where three inconspicuous-looking wooden columns had been labeled "Photo Booth." Alongside the columns was another set of tables with two racks of Google Glass: one with lenses, one without, and in four color choices.

After posing for the camera, the friendly Google employee manning the booth printed the photo out and handed it to me.

Having visited all three of the themed demo stations, I collected my belongings, received a poster, and headed back into the Seattle cold. Without Google Glass, I felt oddly primal holding only my cell phone-- having just witnessed one of the more impressive technological demonstrations of the last few years, a handheld device no longer felt adequate. I wanted more than just the ability to retrieve information-- I wanted to summon it.

Glass is an impressive device, though it would be wrong to call it a product. The hardware has the polish-- it's sturdy and lighter than I anticipated-- though it lacks in sex appeal. Glass, to be blunt, looks like a device that belongs in a science fiction movie, not something you'd expect someone to be walking around with in downtown Seattle.

The voice interface is your primary method of input, yet it lacks the accuracy of your fingers. You may find yourself repeating commands often, and if you don't know the pronunciation of a restaurant or venue, you're out of luck entirely. And even if the voice commands do work correctly, you'll likely look around and catch a brief glimpse of the cold glare from strangers sitting next to you. Voice commands may be ideal when you need a hands-free way to convert cups to fluid ounces in your kitchen, but not to check the latest sports scores while you're riding the bus home.

Google has a winner on their hands-- maybe not in its current form, but Glass represents a category of devices that will flood the market in the next several years. As a society, we're always looking for an easier and more intuitive way to consume information, and wearable electronics let us do just that in an inconspicuous manner.

When Glass is launched to the public later this year, we can only hope the current $1,500 asking price is lowered dramatically. Especially with the high mental barrier to entry and the "nerd" stereotype attached to Glass, Google needs to hit a price point of $200 or less to reach beyond their core audience of technophiles.

Even if Glass is only adopted by enthusiasts, this is not necessarily a bad omen, nor does it spell the end of the product. Rather, it should be taken as a sign that Glass is still not quite ready for the general public-- either stylistically or economically.

Google isn't primarily in the hardware business, and its livelihood doesn't depend on Glass. They have the freedom and resources to turn the glasses, or wearable electronics in general, into a mainstream product. After all, imagine what sort of data they could glean from the public if every man, woman, and child in the world had an additional few sensors on their body.

I, for one, look forward to a future in which every device I own is networked-- the Internet of Things pushed to the extreme. Google's "Seattle Through Glass" event only made me more excited.